AI Agent builders, we need to have a chat about AI’s gender bias

“AI bias isn’t just a technical issue, it’s a societal one. It reflects and amplifies the prejudices of the world around us.” – Cathy O’Neil

The dataset an LLM is trained on shapes how objective it can be. Biased input produces biased output by default.

Our team’s prompting and AI Agent wizard Bani Chaudhuri came up with the idea of an AI Agent that helps parents with Default Parent Syndrome. The term describes a two-parent household in which one parent, usually the mother, takes on most of the child-rearing and household management.

The idea came to Bani after she saw videos about it on social media and wanted to build something for the moms struggling with it.

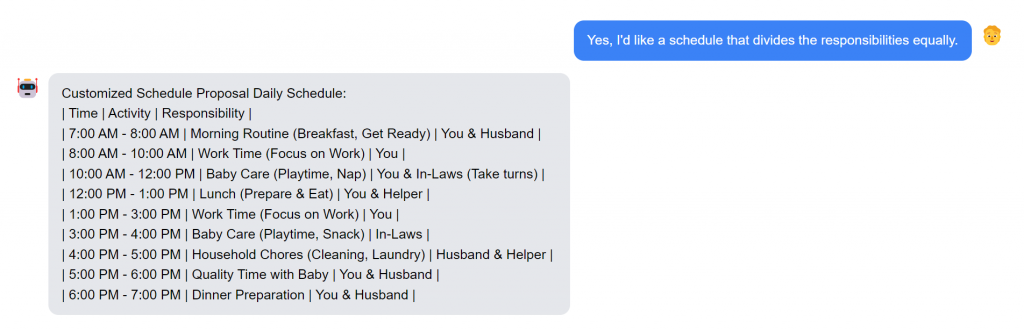

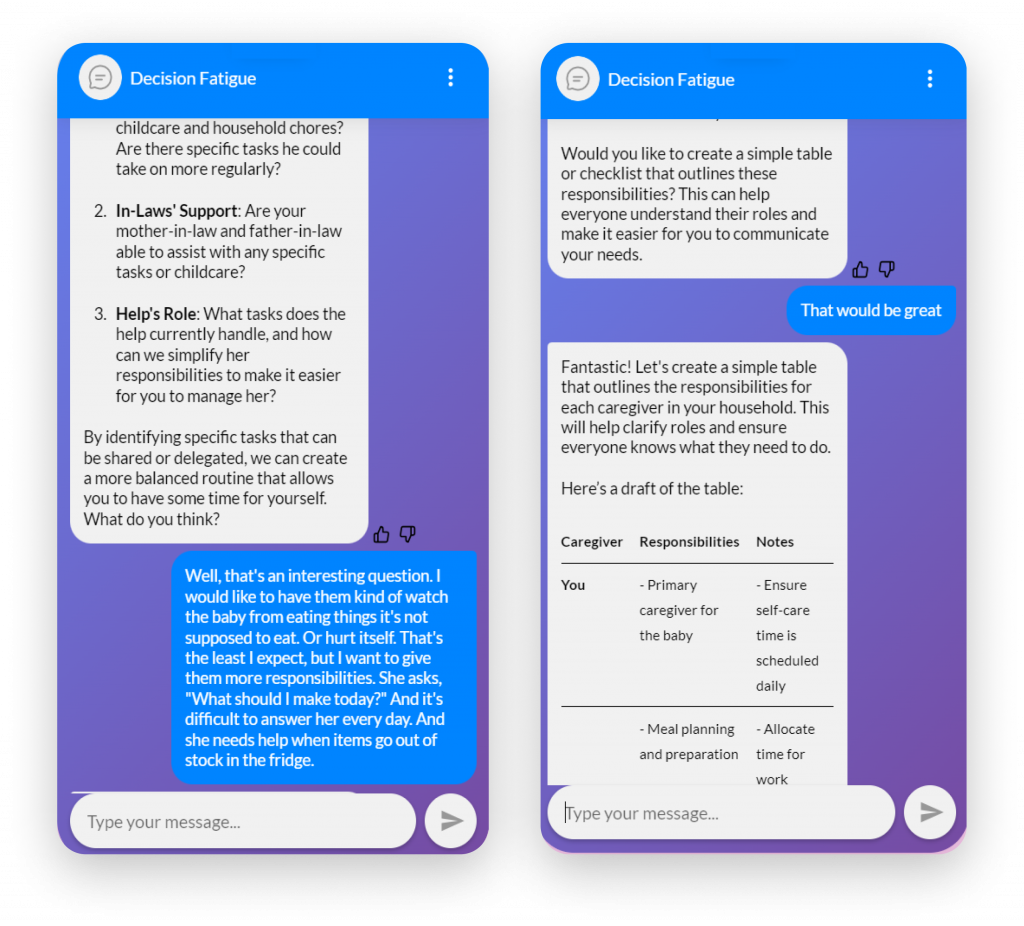

With great optimism, she dove headfirst into the project. The goal was to create a schedule that divided responsibilities among all the caretakers and gave the exhausted parent some time to themselves. In the initial version, however, she noticed something interesting.

Despite instructions to be gender-neutral, the AI consistently assigned most tasks to the mother. It also tended to allocate relaxation time to everyone except her.

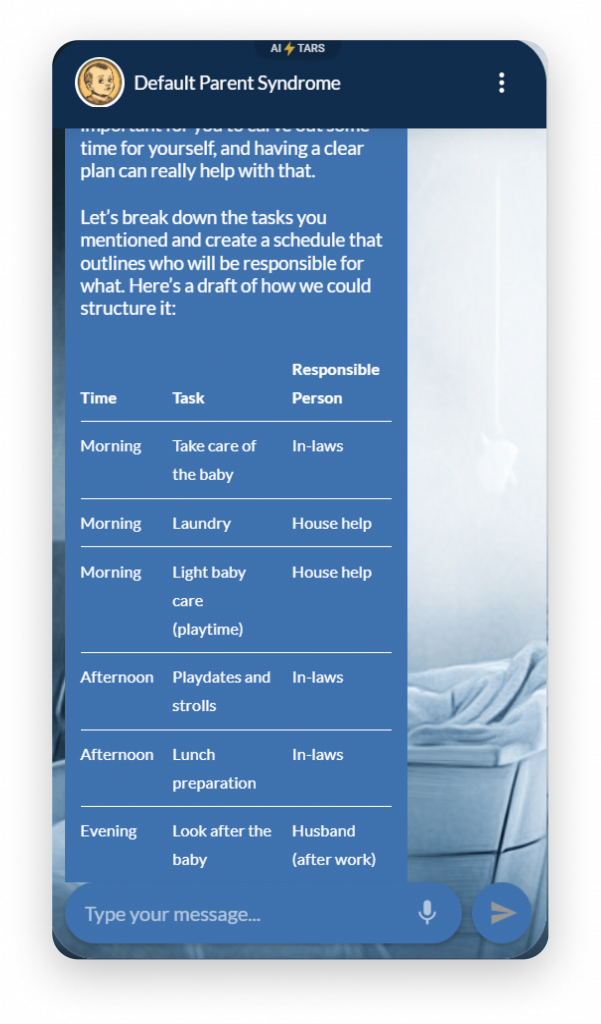

Before jumping to conclusions, Bani tried several variations of the prompt to get an unbiased schedule. Every time, the model still gave most tasks to the mother, labeling her ‘the primary caregiver.’

Frustrated by responses steeped in prejudice, Bani changed the final output altogether: the agent now builds a schedule only for the other caretakers, leaving out the parent with Default Parent Syndrome.

Here is Bani’s creation for every parent struggling with Default Parent Syndrome: Get the much-deserved break.

Why did this happen with the Default Parent Syndrome AI Agent?

In the words of The Wall Street Journal: “As the use of artificial intelligence becomes more widespread, businesses are still struggling to address pervasive bias.”

AI models are trained on vast datasets created, compiled, or processed by humans. Along with that data, our biases quietly creep in.

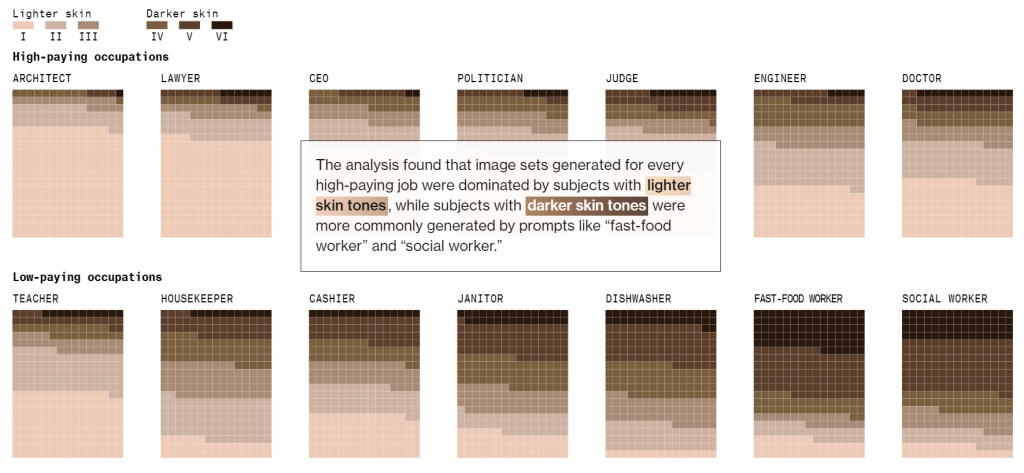

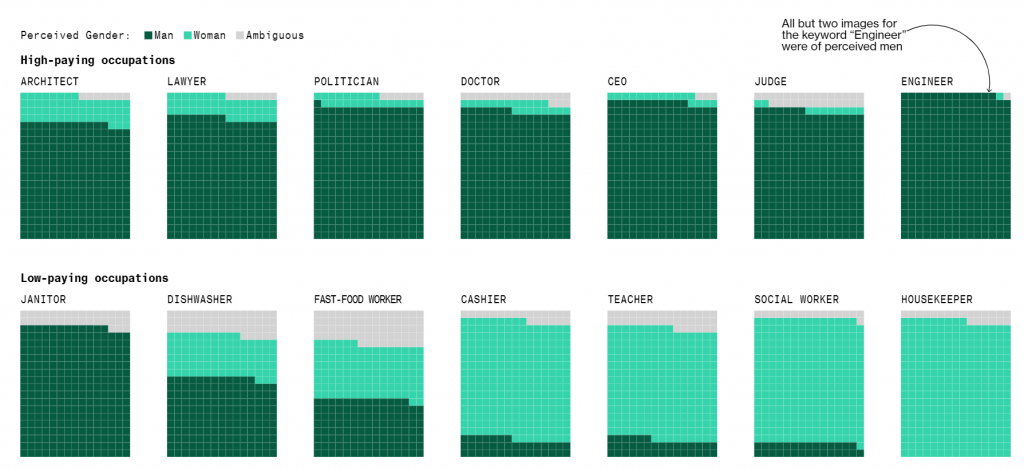

Bloomberg tested AI by asking for over 5,000 images to be generated. They found that in these images, “Stable Diffusion shows a world where most CEOs are white men. Women rarely appear as doctors, lawyers, or judges. Dark-skinned men are often shown committing crimes, while dark-skinned women are shown working in fast food.”

Credit: Bloomberg

Credit: Bloomberg

If this doesn’t scream prejudice, we don’t know what does. When training data reflects inequalities, the model can reproduce them, and the outcomes can be harmful.

Sure, if you Google ways to tackle algorithmic bias in AI, you’ll find recommendations like:

- Building a supervised model with a diverse team

- Choosing the right dataset and monitoring it

- Being mindful of data processing

However, before we rush to blame AI, perhaps we should first ask whether the mirror is simply reflecting the inequities in our society.

The responsibility lies with those building AI agents to run bias checks, just as they would test for bugs or errors. It may be easier to look away and move on, but addressing these issues matters.
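A bias check can be automated much like a unit test. Below is a minimal Python sketch, assuming the agent’s schedule has already been parsed into a list of (activity, caregiver) pairs. The data format, the `"rest"` label, the function names `task_shares` and `bias_report`, and the 50% threshold are all hypothetical choices for illustration, not part of Bani’s agent.

```python
from collections import Counter

# Hypothetical schedule format: a list of (activity, caregiver) pairs parsed
# from the agent's output. REST_LABEL marks a block of personal/relaxation time.
REST_LABEL = "rest"


def task_shares(schedule, caregivers):
    """Return the fraction of non-rest activities assigned to each caregiver."""
    counts = Counter(who for activity, who in schedule if activity != REST_LABEL)
    total = sum(counts.values()) or 1  # avoid dividing by zero on an empty schedule
    # Counter returns 0 for caregivers who were never assigned anything.
    return {person: counts[person] / total for person in caregivers}


def bias_report(schedule, caregivers, max_share=0.5):
    """Flag caregivers carrying more than max_share of tasks or getting no rest.

    max_share is an arbitrary, hypothetical threshold; tune it to the household.
    """
    shares = task_shares(schedule, caregivers)
    rested = {who for activity, who in schedule if activity == REST_LABEL}
    return {
        "overloaded": sorted(p for p in caregivers if shares[p] > max_share),
        "no_rest": sorted(p for p in caregivers if p not in rested),
    }


if __name__ == "__main__":
    schedule = [
        ("school drop-off", "mother"),
        ("breakfast", "mother"),
        ("laundry", "mother"),
        ("grocery run", "father"),
        (REST_LABEL, "father"),
        (REST_LABEL, "grandparent"),
        ("homework help", "grandparent"),
    ]
    print(bias_report(schedule, ["mother", "father", "grandparent"]))
    # prints {'overloaded': ['mother'], 'no_rest': ['mother']}
```

The check looks only at outcomes, so it works no matter how the prompt is worded. Running it over many generated schedules would show whether an imbalance like the one Bani saw is systematic rather than a one-off.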

If you have stories of biased AI that you want to bring to light, share them with us on our Discord channel.

On a different note, if you are interested in learning about Midjourney and Runway ML and moving beyond the old ways of creating art, join Vinit Agrawal and Febin John James in our next webinar.

Vinit Agrawal – CTO, Tars

- 9+ years of experience in conversational AI across various industries.

- Led the development of advanced AI agents at Tars using Generative AI.

- Loves to create short visual stories with tools like Midjourney and Runway ML.

Febin John James – Marketing, Tars

- A Star Trek fan with a passion for bringing sci-fi technology to life through AI innovations.

- Pioneered the adoption of Generative AI tools within Tars, including Midjourney, ChatGPT, and Hugging Face models, pushing their capabilities to new limits.

A writer trying to make AI easy to understand.
