AI Agent evaluation: A complete guide to measuring performance

AI Agents are everywhere now. They’re answering customer support tickets, booking flights, and even writing code. But here’s the problem: not all of them are any good.

The difference between a useful Agent and a frustrating one comes down to evaluation. You need to know what’s working, what’s broken, and how to fix it. This guide will show you how to evaluate AI Agents properly, so you can build ones that actually work.

What makes AI Agents different?

AI Agents aren’t just chatbots that give you answers. They’re more like digital assistants that can actually do things. They can call APIs, use tools, remember context across conversations, and make decisions about what to do next.

This complexity is what makes them powerful, but it’s also what makes evaluation tricky. When a regular language model fails, you usually know why – the answer is wrong or doesn’t make sense. When an Agent fails, it could be because it chose the wrong tool, used it incorrectly, or got confused somewhere in a multi-step process.

The stakes are higher, too. A bad chatbot might give you the wrong answer. A bad Agent might book the wrong flight, delete important files, or cost you money through unnecessary API calls.

The complexity of Agent evaluation vs traditional LLM evaluation

LLM Agent evaluation is different from evaluating regular LLM apps (think RAG, chatbots) because Agents are built from complex, multi-component architectures. Understanding what makes Agents fundamentally different is crucial for effective evaluation:

- Architectural complexity: Agents are built from multiple components, often chained together in intricate workflows

- Tool usage: They can invoke external tools and APIs to complete tasks

- Autonomy: Agents operate with minimal human input, making dynamic decisions on what to do next

- Reasoning frameworks: They often rely on advanced planning or decision-making strategies to guide behavior

As a result, LLM Agents are evaluated at two distinct levels:

- End-to-end evaluation: Treats the entire system as a black box, focusing on whether the overall task was completed successfully, given a specific input.

- Component-level evaluation: Examines individual parts (like sub-agents, RAG pipelines, or API calls) to identify where failures or bottlenecks occur.

Agent Autonomy Levels and Their Evaluation Requirements

LLM agents can be classified into 4 distinct levels, each successively more advanced and autonomous than the last. Understanding these levels is crucial for determining appropriate evaluation strategies:

Level 1: Generator Agents

Most LLM Agents in production today are Generator Agents. These include basic customer support chatbots and RAG-based applications. Agents at this level are purely reactive, responding to user queries without any ability to reflect, refine, or improve beyond their training data or provided context.

Level 2: Tool-Calling Agents

When people talk about LLM Agents, they’re usually referring to Tool-Calling Agents, and this is where most AI development is happening today. These agents can decide when to retrieve information from APIs, databases, or search engines and can execute tasks using external tools, such as booking a flight, browsing the web, or running calculations.

Level 3: Planning Agents

Planning agents take AI beyond simple tool use by structuring multi-step workflows and making results-based execution choices. Unlike Tool-Calling Agents, they detect state changes, refine their approach, and sequence tasks intelligently.

Level 4: Autonomous Agents

Autonomous Agents don’t just follow commands; they initiate actions, persist across sessions, and adapt based on feedback. Unlike lower-level Agents, they can execute tasks without needing constant user input.

Setting up observability: The foundation

Before you can evaluate an Agent, you need to see what it’s actually doing. This is called observability, and it’s like having a detailed log of every action your Agent takes.

Think of it this way: when your Agent processes a request, it creates a “trace”, a complete record of everything that happened from start to finish. Within that trace are “spans”: individual actions like calling a tool or generating text.
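To make the trace/span vocabulary concrete, here is a minimal sketch of how a trace and its spans might be modeled in Python. The `Span` and `Trace` classes and their fields (`kind`, `start_ms`, `end_ms`, `status`, `attributes`) are hypothetical and not the schema of any particular observability tool; real tracing systems such as OpenTelemetry use richer structures.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    """One action inside a trace, e.g. a tool call or an LLM generation (hypothetical schema)."""
    name: str
    kind: str            # assumed values: "llm", "tool", "retrieval"
    start_ms: float
    end_ms: float
    status: str = "ok"   # "ok" or "error"
    attributes: dict = field(default_factory=dict)

    @property
    def duration_ms(self) -> float:
        return self.end_ms - self.start_ms


@dataclass
class Trace:
    """The complete record of one agent run, from request to final answer."""
    trace_id: str
    user_input: str
    spans: list[Span] = field(default_factory=list)

    def add_span(self, span: Span) -> None:
        self.spans.append(span)

    @property
    def duration_ms(self) -> float:
        # Wall-clock time from the earliest span start to the latest span end.
        if not self.spans:
            return 0.0
        return max(s.end_ms for s in self.spans) - min(s.start_ms for s in self.spans)

    def spans_of_kind(self, kind: str) -> list[Span]:
        return [s for s in self.spans if s.kind == kind]


trace = Trace("t-001", "Book a flight from New York to London")
trace.add_span(Span("plan", "llm", 0, 850))
trace.add_span(Span("search_flights", "tool", 850, 2100, attributes={"origin": "NYC", "dest": "LON"}))
trace.add_span(Span("final_answer", "llm", 2100, 2900))
print(trace.duration_ms)                 # 2900
print(len(trace.spans_of_kind("tool")))  # 1
```

Keeping per-span timing and status is what later lets you measure latency per step and pinpoint which action failed.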

Key metrics you should track

Here are the metrics that actually matter for Agent evaluation:

Performance basics

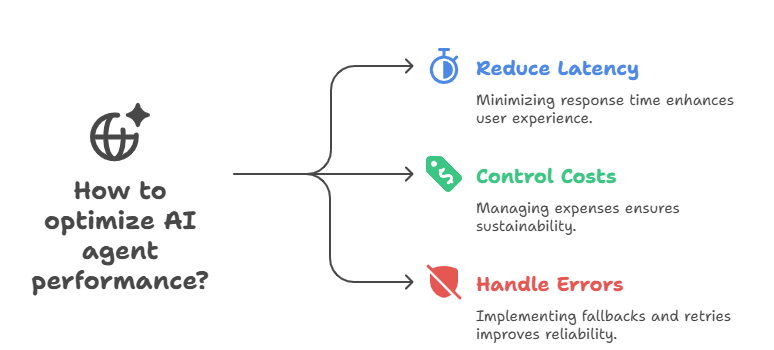

- Latency: How quickly does the agent respond? Long waiting times negatively impact user experience. You should measure latency for tasks and individual steps by tracing agent runs.

- Costs: What’s the expense per agent run? AI Agents rely on LLM calls billed per token, and often on external APIs with their own charges. Frequent tool usage or multiple prompts can rapidly increase costs.

- Request Errors: How many requests did the Agent fail? This can include API errors or failed tool calls. To make your agent more robust against these in production, you can then set up fallbacks or retries.
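Once runs are traced, these three basics reduce to simple aggregates. The sketch below assumes each run has been exported as a dict with hypothetical fields `latency_ms`, `input_tokens`, `output_tokens`, and `error`; the per-token prices are placeholders, not any provider’s real rates.

```python
import statistics

# Placeholder prices in USD per 1,000 tokens; substitute your provider's actual rates.
PRICE_PER_1K_INPUT = 0.001
PRICE_PER_1K_OUTPUT = 0.002


def run_cost(run: dict) -> float:
    """Estimated LLM cost of one agent run, from its token counts."""
    return (run["input_tokens"] / 1000) * PRICE_PER_1K_INPUT + (
        run["output_tokens"] / 1000
    ) * PRICE_PER_1K_OUTPUT


def summarize(runs: list[dict]) -> dict:
    """Latency percentiles, average cost, and error rate across a batch of runs."""
    latencies = sorted(r["latency_ms"] for r in runs)
    # quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile.
    # The "inclusive" method keeps the estimate within the observed range.
    p95 = statistics.quantiles(latencies, n=20, method="inclusive")[18]
    return {
        "p50_latency_ms": statistics.median(latencies),
        "p95_latency_ms": p95,
        "avg_cost_usd": sum(run_cost(r) for r in runs) / len(runs),
        # Any run with a failed API or tool call counts as an error.
        "error_rate": sum(1 for r in runs if r["error"]) / len(runs),
    }


runs = [
    {"latency_ms": 1200, "input_tokens": 1500, "output_tokens": 300, "error": False},
    {"latency_ms": 900, "input_tokens": 1100, "output_tokens": 250, "error": False},
    {"latency_ms": 4800, "input_tokens": 5200, "output_tokens": 900, "error": True},
    {"latency_ms": 1500, "input_tokens": 1700, "output_tokens": 400, "error": False},
]
print(summarize(runs))
```

Reporting a tail percentile alongside the median matters here: one slow, failing run barely moves the median but dominates the p95, which is often what users actually notice.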

Quality measures

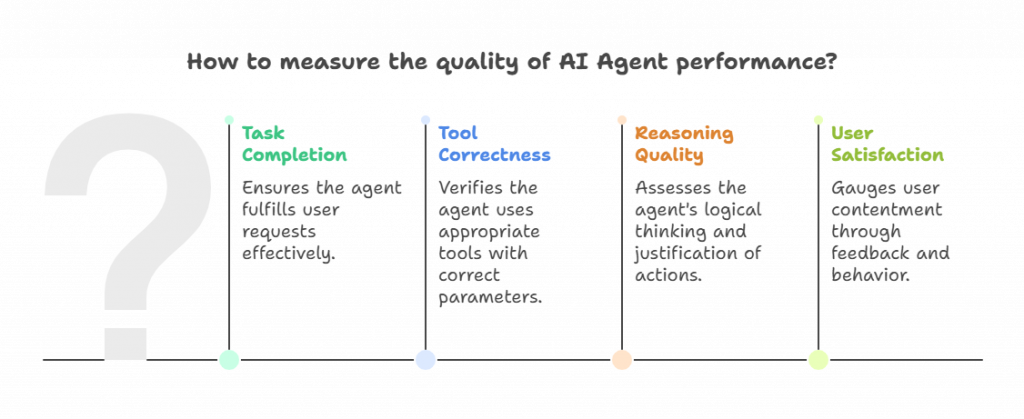

Task completion – Did the Agent actually accomplish what the user asked for? This sounds simple, but can be tricky to measure consistently.

Tool correctness – When the Agent decided to use a tool, was it the right choice? Did it provide the correct parameters?

Reasoning quality – For more advanced Agents, does the step-by-step thinking make sense? Is each action justified?

User satisfaction – Track both explicit feedback (thumbs up/down, ratings) and implicit signals (do users retry, rephrase questions, or abandon the conversation?).

Evaluating tool usage

For Tool-Calling Agents (Level 2), tool evaluation is critical. There are two main aspects:

Tool correctness

Did the Agent choose the right tools and use them properly? You can evaluate this at different levels of strictness:

- Basic tool selection: Did it pick the right tools from available options?

- Parameter accuracy: Were the inputs to each tool correct?

- Output validation: Did the tools return the expected results?

This is mostly deterministic – you can check objectively whether the Agent called the right APIs with the right parameters. The challenge is defining what “right” means for your specific use case.
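The three strictness levels above map naturally onto increasingly strict comparisons between the tool calls you expected and the ones the Agent actually made. Here is a minimal sketch, assuming each call is recorded as a hypothetical `ToolCall` with a name, an argument dict, and the tool’s output. Exact equality is used as the definition of “right”, which is precisely the use-case-specific choice you may need to relax (e.g. tolerant date or string matching).

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class ToolCall:
    name: str
    args: dict[str, Any] = field(default_factory=dict)
    output: Any = None


def tool_correctness(expected: list[ToolCall], actual: list[ToolCall], level: str = "selection") -> float:
    """Fraction of expected tool calls the agent matched at the given strictness level.

    level="selection": the right tool was called at all (order ignored).
    level="params":    right tool AND identical arguments.
    level="output":    right tool, identical arguments, AND identical output.
    """
    if not expected:
        return 1.0

    def matches(exp: ToolCall, act: ToolCall) -> bool:
        if exp.name != act.name:
            return False
        if level in ("params", "output") and exp.args != act.args:
            return False
        if level == "output" and exp.output != act.output:
            return False
        return True

    remaining = list(actual)
    hits = 0
    for exp in expected:
        for i, act in enumerate(remaining):
            if matches(exp, act):
                hits += 1
                del remaining[i]  # each actual call can satisfy only one expected call
                break
    return hits / len(expected)


expected = [ToolCall("search_flights", {"origin": "NYC", "dest": "LON"}), ToolCall("book_flight", {"flight_id": "FL-101"})]
actual = [ToolCall("search_flights", {"origin": "NYC", "dest": "LON"}), ToolCall("book_flight", {"flight_id": "FL-202"})]
print(tool_correctness(expected, actual, "selection"))  # 1.0
print(tool_correctness(expected, actual, "params"))     # 0.5
```

The Agent picked the right tools but booked the wrong flight, so it scores perfectly on selection and only half on parameter accuracy, which is why reporting a single “tool correctness” number can hide the real failure.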

Tool efficiency

Correctness isn’t enough if your Agent takes forever or wastes money. Tool efficiency measures whether the Agent is using tools in the smartest way possible.

Common efficiency problems include:

- Redundant calls: Using the same tool multiple times when once would do

- Wrong sequence: Calling tools in an illogical order that slows things down

- Unnecessary tools: Using complex tools when simple ones would work

You can measure some of this automatically (counting duplicate calls), but for complex workflows, you might need to use another language model to judge whether the Agent’s approach was efficient.
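The automatic part, counting duplicate calls, takes only a few lines. This sketch treats two calls as redundant when they hit the same tool with identical arguments within one run; that definition is an assumption, since some repeated calls (polling a status endpoint, for example) are legitimate. Judging call order or tool choice still needs a human or an LLM judge.

```python
import json
from collections import Counter


def redundant_calls(calls: list[tuple[str, dict]]) -> int:
    """Count tool calls that repeat an earlier (tool, arguments) pair in the same run."""
    # Serialize args with sorted keys so {"a": 1, "b": 2} and {"b": 2, "a": 1} compare equal.
    keys = [(name, json.dumps(args, sort_keys=True)) for name, args in calls]
    return sum(count - 1 for count in Counter(keys).values())


def efficiency_ratio(calls: list[tuple[str, dict]]) -> float:
    """Share of calls that were not redundant (1.0 means no duplicates)."""
    if not calls:
        return 1.0
    return 1 - redundant_calls(calls) / len(calls)


run = [
    ("get_weather", {"city": "Paris"}),
    ("search_hotels", {"city": "Paris", "nights": 2}),
    ("get_weather", {"city": "Paris"}),                 # exact duplicate
    ("search_hotels", {"nights": 2, "city": "Paris"}),  # same args, different key order
]
print(redundant_calls(run))   # 2
print(efficiency_ratio(run))  # 0.5
```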

Task completion evaluation

This is the big question: did the Agent actually accomplish what the user wanted?

For simple, well-defined tasks, this can be straightforward. If someone asks to book a flight from New York to London, either the flight got booked or it didn’t. But real-world tasks are messier.

Consider a user asking an Agent to “help me plan a weekend trip to Paris.” Success could mean:

- Finding flights and hotels

- Suggesting activities and restaurants

- Providing a detailed itinerary

- Just giving general travel advice

The key is defining success criteria upfront. For narrow use cases, you can create test datasets with clear right and wrong answers. For more open-ended applications, you might need to use language models to judge whether tasks were completed satisfactorily.
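Defining success criteria upfront can be as simple as attaching explicit checks to each test case. The sketch below uses the Paris example: each hypothetical `TaskCase` lists the criteria that count as success for that request, and a case passes only if all of them hold. The checks are plain string tests on the final answer purely for illustration; in practice they might inspect tool calls or booking records, or be replaced by an LLM judge for open-ended tasks.

```python
from dataclasses import dataclass
from typing import Callable

Check = Callable[[str], bool]


@dataclass
class TaskCase:
    user_input: str
    criteria: dict[str, Check]  # criterion name -> check on the agent's final answer


def evaluate_case(case: TaskCase, answer: str) -> dict[str, bool]:
    """Result of every success criterion for one answer."""
    return {name: check(answer) for name, check in case.criteria.items()}


def task_completion_rate(cases: list[TaskCase], answers: list[str]) -> float:
    """Fraction of cases where every success criterion was met."""
    passed = sum(all(evaluate_case(c, a).values()) for c, a in zip(cases, answers))
    return passed / len(cases)


paris = TaskCase(
    "Help me plan a weekend trip to Paris",
    {
        "mentions_flights": lambda a: "flight" in a.lower(),
        "mentions_hotel": lambda a: "hotel" in a.lower(),
        "has_itinerary": lambda a: "saturday" in a.lower() and "sunday" in a.lower(),
    },
)
answers = ["Here are two flights and a hotel near the Marais. Saturday: Louvre. Sunday: Montmartre."]
print(task_completion_rate([paris], answers))  # 1.0
```

Keeping the per-criterion results from `evaluate_case`, rather than only the pass/fail rate, shows which part of the task the Agent tends to skip.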

Reasoning evaluation

In real-world scenarios, your LLM agent’s reasoning is shaped by much more than just the model itself. Things like the prompt template (e.g., chain-of-thought reasoning), tool usage, and the agent’s architecture all play critical roles.

Key reasoning metrics include:

- Reasoning Relevancy: Whether the reasoning behind each tool call clearly ties back to the user’s request

- Reasoning Coherence: Whether the reasoning follows a logical, step-by-step process in which each step adds value

Agentic reasoning matters somewhat for Level 2 tool-calling agents, though standardized frameworks like ReAct limit how much it varies. It becomes increasingly critical at Level 3, as agents take on a planning role and intermediate reasoning steps grow both more important and more domain-specific.
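Because relevancy and coherence are judgment calls, they are usually scored by an LLM judge working from a rubric. Here is a minimal sketch, assuming a hypothetical `judge` callable that takes a prompt string and returns the model’s reply text; plug in whichever model client you use. The rubric wording, the 1 to 5 scale, and the `SCORE:` reply format are illustrative choices, not a standard.

```python
import re
from typing import Callable, Optional

RUBRIC = """You are evaluating one reasoning step of an AI agent.
User request: {request}
Agent reasoning before calling `{tool}`: {reasoning}

Rate REASONING RELEVANCY from 1 (unrelated to the request) to 5 (clearly justified by the request).
Reply with the score on the last line as: SCORE: <1-5>"""


def score_reasoning(request: str, tool: str, reasoning: str, judge: Callable[[str], str]) -> Optional[int]:
    """Ask an LLM judge to rate one reasoning step; returns None if no score can be parsed."""
    reply = judge(RUBRIC.format(request=request, tool=tool, reasoning=reasoning))
    scores = re.findall(r"SCORE:\s*([1-5])", reply)
    return int(scores[-1]) if scores else None  # trust the last score the judge wrote


def fake_judge(prompt: str) -> str:
    # Stand-in for a real model call so the example runs offline.
    return "The step searches flights, which the user asked for.\nSCORE: 5"


print(score_reasoning("Find me a flight to London", "search_flights",
                      "The user wants flights, so search first.", fake_judge))  # 5
```

Returning `None` instead of guessing when the reply cannot be parsed keeps malformed judge outputs visible rather than silently skewing the average. A coherence check can follow the same pattern with a rubric over the full sequence of steps.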

Two approaches: Online vs offline evaluation

You can evaluate Agents in two main ways:

Offline evaluation

Test your Agent on curated datasets where you know the right answers. This is like giving it a standardized test. It’s repeatable, measurable, and good for catching regressions before deployment.

The downside? Your test data might not reflect real-world usage. Agents that ace your test cases might still stumble when real users ask unexpected questions.

Online evaluation

Monitor your Agent’s performance with real users in production. This gives you authentic feedback about how well it works in practice, but it’s harder to get reliable measurements.

You’ll rely more on user feedback, behavioral signals (like retry rates), and downstream metrics (like whether users complete their intended actions).
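Behavioral and downstream signals can be computed straight from session logs. This sketch assumes a hypothetical log in which each session is a list of event names (`"message"`, `"retry"`, `"thumbs_down"`, `"goal_completed"`); real products will have their own event taxonomy, and signals like rephrasing usually have to be detected heuristically or with a classifier rather than logged directly.

```python
def online_signals(sessions: dict[str, list[str]]) -> dict[str, float]:
    """Share of sessions showing retries, explicit negative feedback, and completed goals."""
    n = len(sessions)

    def rate(pred) -> float:
        return sum(1 for events in sessions.values() if pred(events)) / n

    return {
        "retry_rate": rate(lambda e: "retry" in e),
        "thumbs_down_rate": rate(lambda e: "thumbs_down" in e),
        # Downstream metric: did the user finish what they came to do?
        "completion_rate": rate(lambda e: "goal_completed" in e),
    }


sessions = {
    "s1": ["message", "goal_completed"],
    "s2": ["message", "retry", "message", "goal_completed"],
    "s3": ["message", "thumbs_down"],
    "s4": ["message", "retry", "message"],
}
print(online_signals(sessions))
# {'retry_rate': 0.5, 'thumbs_down_rate': 0.25, 'completion_rate': 0.5}
```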

The best approach: Both

Smart teams use offline evaluation for development and quality gates, then online monitoring to catch issues the tests missed. Create a feedback loop: production failures become new test cases, which improves your offline evaluation over time.

Component-level evaluation

Here’s something crucial that many people miss: you need to evaluate individual components, not just the whole system.

Modern Agents are complex systems with multiple pieces – retrievers, generators, tools, and sub-agents. If your overall performance is poor, you need to know which specific component is the problem.

For example, if your Agent has low task completion rates, is it because:

- The retriever isn’t finding relevant information?

- The reasoning component is making poor decisions?

- A specific tool isn’t working properly?

- The final response generator isn’t synthesizing information well?

Component-level evaluation, combined with good tracing, helps you identify the actual bottlenecks instead of guessing.
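With tracing in place, a first-pass attribution can be automated: for each failed run, find the earliest span that errored and tally which component it belongs to. Here is a sketch, assuming each span is a dict with hypothetical `component` and `status` keys and that spans are sorted by start time. The earliest error is only a heuristic; a retriever can return irrelevant documents without raising any error, which is why per-component quality metrics are still needed.

```python
from collections import Counter
from typing import Optional


def first_failing_component(spans: list[dict]) -> Optional[str]:
    """Component of the earliest errored span in a run (spans assumed sorted by start time)."""
    for span in spans:
        if span["status"] == "error":
            return span["component"]
    return None


def failure_breakdown(failed_runs: list[list[dict]]) -> Counter:
    """Tally which component failed first across failed runs; 'unattributed' if no span errored."""
    return Counter(first_failing_component(run) or "unattributed" for run in failed_runs)


failed_runs = [
    [{"component": "retriever", "status": "ok"}, {"component": "search_tool", "status": "error"}],
    [{"component": "retriever", "status": "error"}, {"component": "generator", "status": "error"}],
    [{"component": "retriever", "status": "ok"}, {"component": "generator", "status": "ok"}],
]
print(failure_breakdown(failed_runs))
```

The “unattributed” bucket is informative in its own right: those are runs where every step reported success but the task still failed, which points to quality problems such as poor retrieval or weak synthesis rather than outright errors.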

Custom evaluation with natural language

Sometimes you need to evaluate something specific to your use case. The G-Eval framework lets you define custom evaluation criteria in plain English, then uses language models to score your Agent’s performance.

For instance, with a restaurant booking Agent, you might want to ensure it always explains what alternatives it checked when a restaurant is fully booked. You can define this requirement in natural language, and the evaluation system will check whether each interaction meets this standard.

This is particularly useful for subjective qualities like helpfulness, professionalism, or brand consistency that are important for your specific application.
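The core idea can be sketched without any particular library. G-Eval, as described in its original paper, has the judge model follow a set of evaluation steps derived from the criterion and then weights each possible score by the probability the model assigns to it, which gives a smoother result than a single sampled number. In the sketch below the evaluation steps are written by hand, and the `score_probs` input stands in for those score probabilities (in practice read from the model’s token log-probabilities, where the API exposes them). The criterion text and helper names are hypothetical, and this is not the API of any G-Eval implementation.

```python
CRITERION = (
    "When the requested restaurant is fully booked, the agent explains "
    "which alternative restaurants or time slots it checked."
)

STEPS = [
    "Check whether the agent reported that the requested restaurant was fully booked.",
    "If so, check whether it named the alternatives or time slots it looked at.",
    "Lower the score if alternatives are offered without saying how they were found.",
]


def build_geval_prompt(criterion: str, steps: list[str], transcript: str) -> str:
    """Assemble a judge prompt from a plain-English criterion and evaluation steps."""
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, start=1))
    return (
        f"Evaluation criterion: {criterion}\n"
        f"Evaluation steps:\n{numbered}\n\n"
        f"Conversation:\n{transcript}\n\n"
        "Score the conversation from 1 to 5."
    )


def weighted_score(score_probs: dict[int, float]) -> float:
    """Probability-weighted score: sum of score * p(score), renormalized over the given scores."""
    total = sum(score_probs.values())
    return sum(score * p for score, p in score_probs.items()) / total


prompt = build_geval_prompt(CRITERION, STEPS, "User: Table for 2 at Chez Marie?\nAgent: ...")
print(weighted_score({3: 0.2, 4: 0.5, 5: 0.3}))
```

With the illustrative probabilities above, the weighted score comes out at roughly 4.1, whereas simply taking the most likely score would report a flat 4.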

Common evaluation challenges

Handling subjectivity

Different people have different standards for what makes a good Agent response. One person might want detailed explanations; another might prefer brief answers.

Solutions include training evaluators on consistent standards, using multiple evaluators and averaging their scores, and creating detailed rubrics that spell out exactly what you’re looking for.
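Averaging across evaluators is straightforward, and it also pays to flag items where evaluators disagree sharply, since those often point to an ambiguous rubric rather than a bad response. Here is a sketch, assuming every item receives scores on a shared 1 to 5 rubric; the disagreement threshold is an arbitrary illustrative value.

```python
import statistics


def aggregate_ratings(ratings: dict[str, list[int]], max_spread: int = 2) -> dict[str, dict]:
    """Mean score per item, plus a flag when evaluators' scores differ by more than max_spread."""
    result = {}
    for item, scores in ratings.items():
        result[item] = {
            "mean": statistics.mean(scores),
            "flag_for_review": max(scores) - min(scores) > max_spread,
        }
    return result


ratings = {
    "resp-1": [4, 5, 4],
    "resp-2": [1, 5, 3],  # evaluators disagree: revisit the rubric for this case
}
print(aggregate_ratings(ratings))
```

For a more formal measure of how consistently evaluators apply the rubric, inter-rater agreement statistics such as Cohen’s kappa are the usual choice.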

Managing costs and scale

Thorough evaluation can get expensive, especially with human reviewers and extensive testing. You need to balance depth with practicality.

Start with automated metrics to filter obviously good or bad cases, then focus human evaluation on edge cases and subjective qualities. Use small test sets for quick checks during development, and larger ones for major releases.
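The tiered approach can be sketched as a routing step: an automated score settles the clear cases, and only the uncertain middle band goes to human reviewers. The thresholds, and the assumption that automated scores fall between 0 and 1, are illustrative; tune the band to the review capacity you actually have.

```python
def triage(auto_scores: dict[str, float], low: float = 0.3, high: float = 0.8) -> dict[str, list[str]]:
    """Split cases into auto-pass, auto-fail, and human-review buckets by automated score (0-1)."""
    buckets: dict[str, list[str]] = {"auto_pass": [], "auto_fail": [], "human_review": []}
    for case_id, score in auto_scores.items():
        if score >= high:
            buckets["auto_pass"].append(case_id)
        elif score <= low:
            buckets["auto_fail"].append(case_id)
        else:
            buckets["human_review"].append(case_id)  # the ambiguous middle band
    return buckets


print(triage({"c1": 0.95, "c2": 0.1, "c3": 0.55, "c4": 0.81}))
# {'auto_pass': ['c1', 'c4'], 'auto_fail': ['c2'], 'human_review': ['c3']}
```

Widening the band between `low` and `high` sends more cases to humans; narrowing it saves reviewer time at the cost of trusting the automated metric more.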

Dealing with changing performance

Agents aren’t static. They get updated, their underlying models change, and their environment shifts over time. You need ongoing monitoring to catch performance drift.

Set up automated alerts for key metrics, run regular evaluation cycles, and maintain both historical baselines and current benchmarks.
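A basic drift alert compares a recent window of a metric against its historical baseline. The sketch below flags a metric when its recent mean falls by more than a chosen fraction below the baseline mean; both the relative-drop rule and the 5% tolerance are assumptions, and statistical tests or control charts are common alternatives.

```python
import statistics


def drift_alerts(baseline: dict[str, list[float]], recent: dict[str, list[float]],
                 tolerance: float = 0.05) -> list[str]:
    """Names of higher-is-better metrics whose recent mean fell more than `tolerance` below baseline."""
    alerts = []
    for metric, history in baseline.items():
        base = statistics.mean(history)
        current = statistics.mean(recent[metric])
        if base > 0 and (base - current) / base > tolerance:
            alerts.append(f"{metric}: {base:.3f} -> {current:.3f}")
    return alerts


baseline = {"task_completion": [0.86, 0.88, 0.87], "tool_correctness": [0.93, 0.94, 0.92]}
recent = {"task_completion": [0.79, 0.80], "tool_correctness": [0.93, 0.93]}
print(drift_alerts(baseline, recent))
```

Here task completion has dropped by roughly 9% relative to its baseline and triggers an alert, while tool correctness is unchanged. For lower-is-better metrics such as latency or cost, flip the comparison.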

Building a practical evaluation system

Here’s how to set up Agent evaluation in practice:

- Start with observability: Get tracing set up so you can see what your Agent is actually doing

- Define success clearly: What does good performance look like for your specific use case?

- Mix evaluation types: Combine automated metrics, human evaluation, and real-world testing

- Evaluate components separately: Don’t just look at end-to-end performance

- Monitor continuously: Set up ongoing measurement, not just pre-deployment testing

- Create feedback loops: Use production insights to improve your evaluation and your Agent

What’s next for Agent evaluation

The field is moving fast. New evaluation techniques are emerging for things like creativity, common-sense reasoning, and multi-agent interactions.

We’re also seeing better integration between evaluation and development tools. Instead of evaluation being a separate step, it’s becoming part of the development process itself, providing real-time feedback as you build and improve Agents.

Safety evaluation is becoming more important as Agents gain more autonomy and access to real systems. We need better ways to ensure Agents behave safely and align with human values, especially as they become more powerful.

Making it work

Building good Agents is hard. Evaluating them properly is what separates the teams that build useful systems from those that build expensive demos.

The key is being systematic about it. Don’t just test whether your Agent works with a few example inputs. Set up proper observability, define clear success criteria, evaluate both the overall system and individual components, and keep measuring as things change.

It takes more work upfront, but it’s the difference between an Agent that actually helps users and one that frustrates them. And in a world where AI Agents are becoming more common, that difference matters more every day.

Remember: the goal isn’t to build the most sophisticated Agent. It’s to build one that reliably does what users need it to do. Good evaluation helps you get there.


